|

Jiali Chen is currently a third-year direct Ph.D. student at the Key Laboratory of Big Data and Intelligent Robot at South China University of Technology (SCUT) and at The Hong Kong Polytechnic University (PolyU), supervised by Prof. Yi Cai, Prof. Qing Li and Prof. Wenqi Fan. He works closely with Dr. Jiayuan Xie at PolyU. Before that, he obtained his B.E. degree from the Department of Software Engineering at SCUT in 2023. His research interests revolve around multimodal reasoning, MLLM post-training, and causal inference. Feel free to get in touch if you are interested in discussing potential collaborations or partnering on research projects. |

|

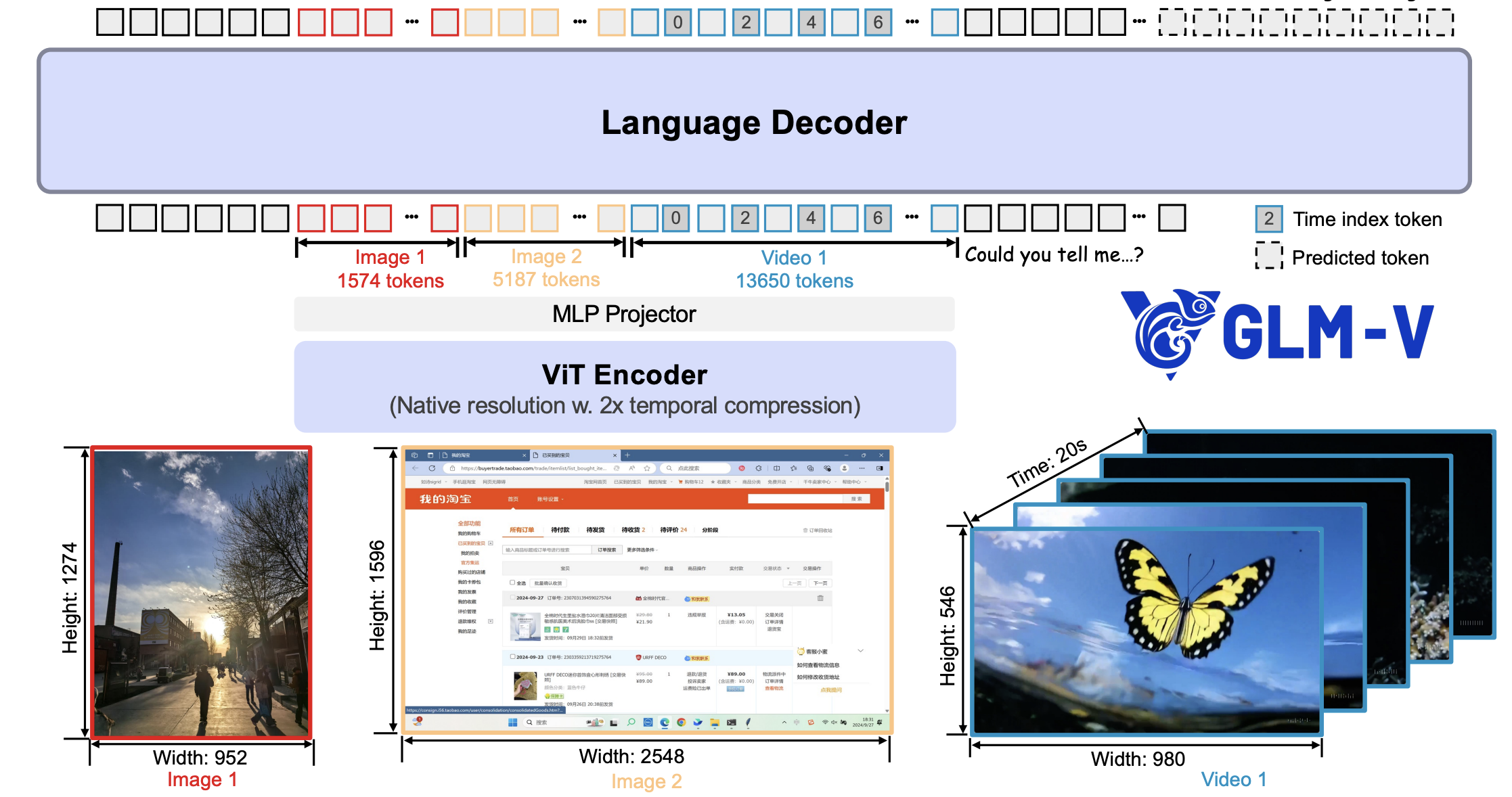

GLM-4.5V and GLM-4.1V-Thinking: Towards Versatile Multimodal Reasoning with Scalable Reinforcement Learning

Zhipu AI, GLM-V Team

[Technical Report], [Github], [GLM-4.1V-9B-Thinking], [GLM-4.5V]

GLM-4.1V-9B-Thinking is a new open-source VLM designed to explore the upper limits of reasoning in vision-language models. GLM-4.5V is based on ZhipuAI’s next-generation flagship text foundation model GLM-4.5-Air (106B parameters, 12B active). |

|

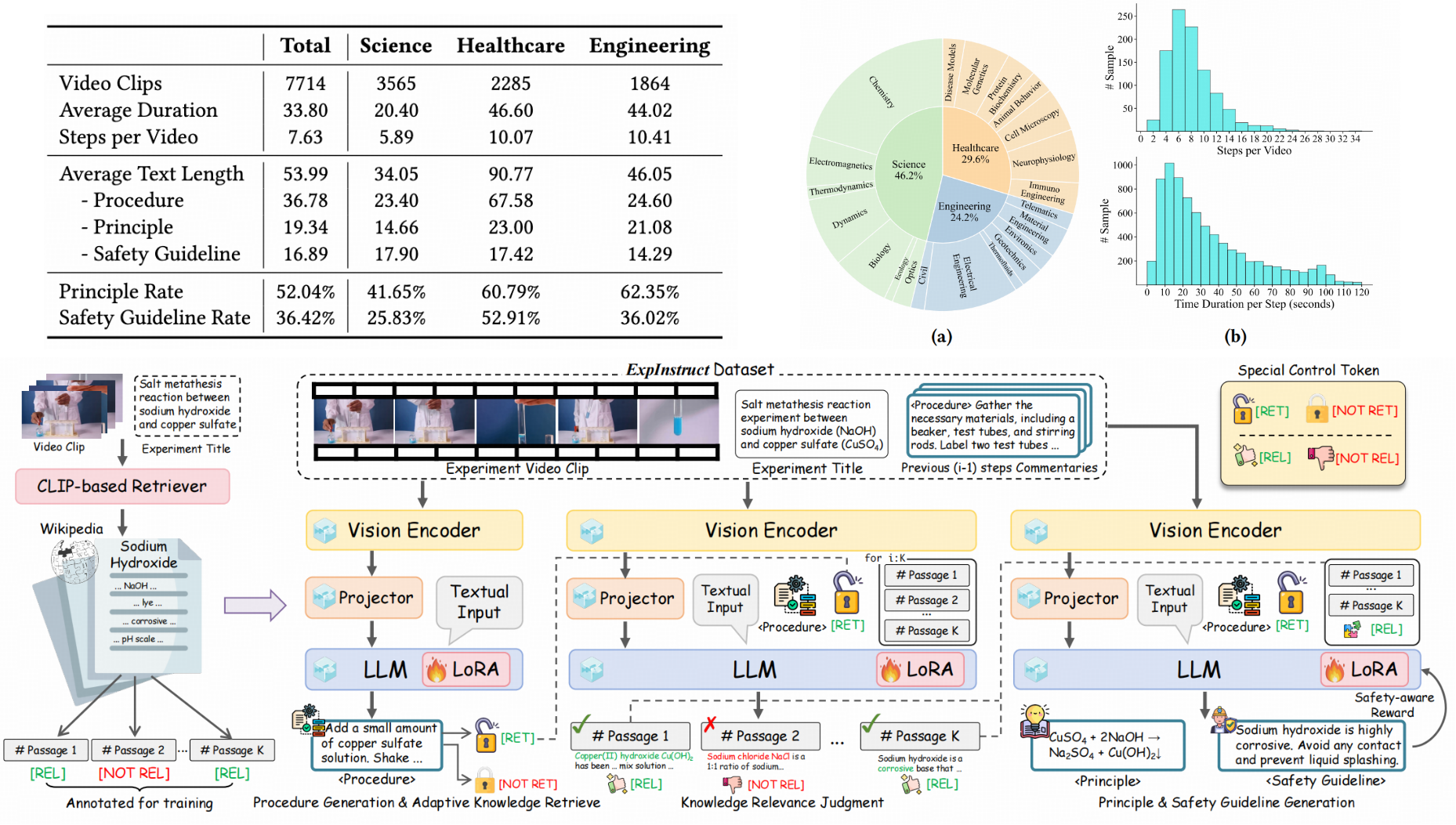

ExpStar: Towards Automatic Commentary Generation for Multi-discipline Scientific Experiments

Jiali Chen*, Yujie Jia*, Zihan Wu, Jinyu Yang, Jianpeng Chen, Xusen Hei, Jiayuan Xie, Yi Cai, Qing Li

ACM Multimedia, ACM MM 2025

[Arxiv], [Code], [Project Page]

Area: MLLM, Video Understanding, Adaptive Knowledge Retrieval

We present ExpStar, an LMM tailored for automatic commentary generation in multi-discipline scientific experiments, equipped with adaptive knowledge retrieval and safety-aware optimization. |

|

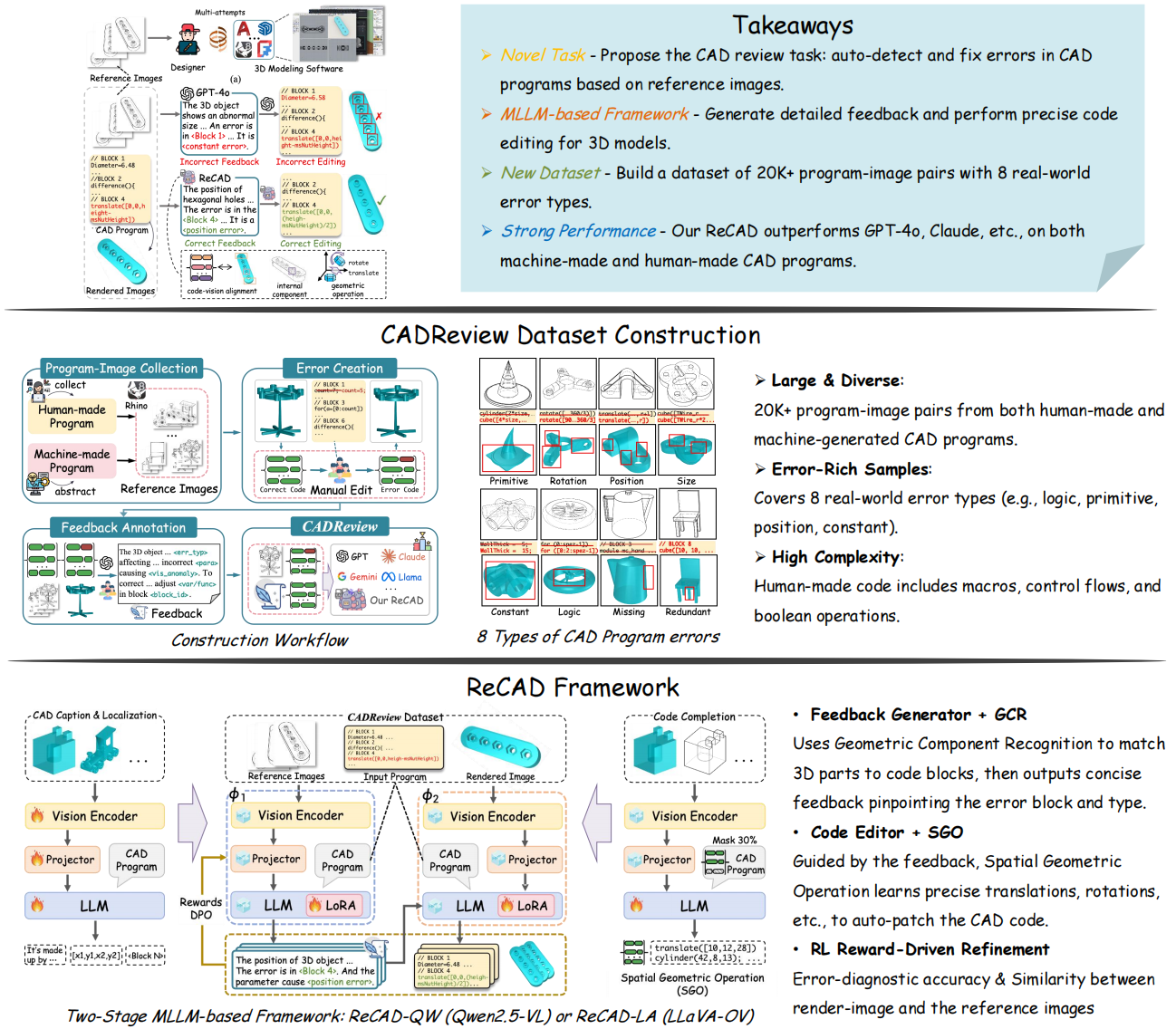

CADReview: Automatically Reviewing CAD Programs with Error Detection and Correction

Jiali Chen*, Xusen Hei*, Hongfei Liu, Yuancheng Wei, Zikun Deng, Jiayuan Xie, Yi Cai, Qing Li

Main Conference of the Annual Meeting of the Association for Computational Linguistics, ACL 2025 (Oral)

[Arxiv], [Code], [Project Page]

Area: MLLM, CAD Modeling

We propose ReCAD, an MLLM-based framework for automatic CAD program error detection and correction. It enables MLLMs to achieve precise code-vision alignment through geometric component recognition and spatial operation mechanisms. Experiments on our newly introduced CADReview dataset show that ReCAD significantly outperforms existing MLLMs in CAD error correction. |

|

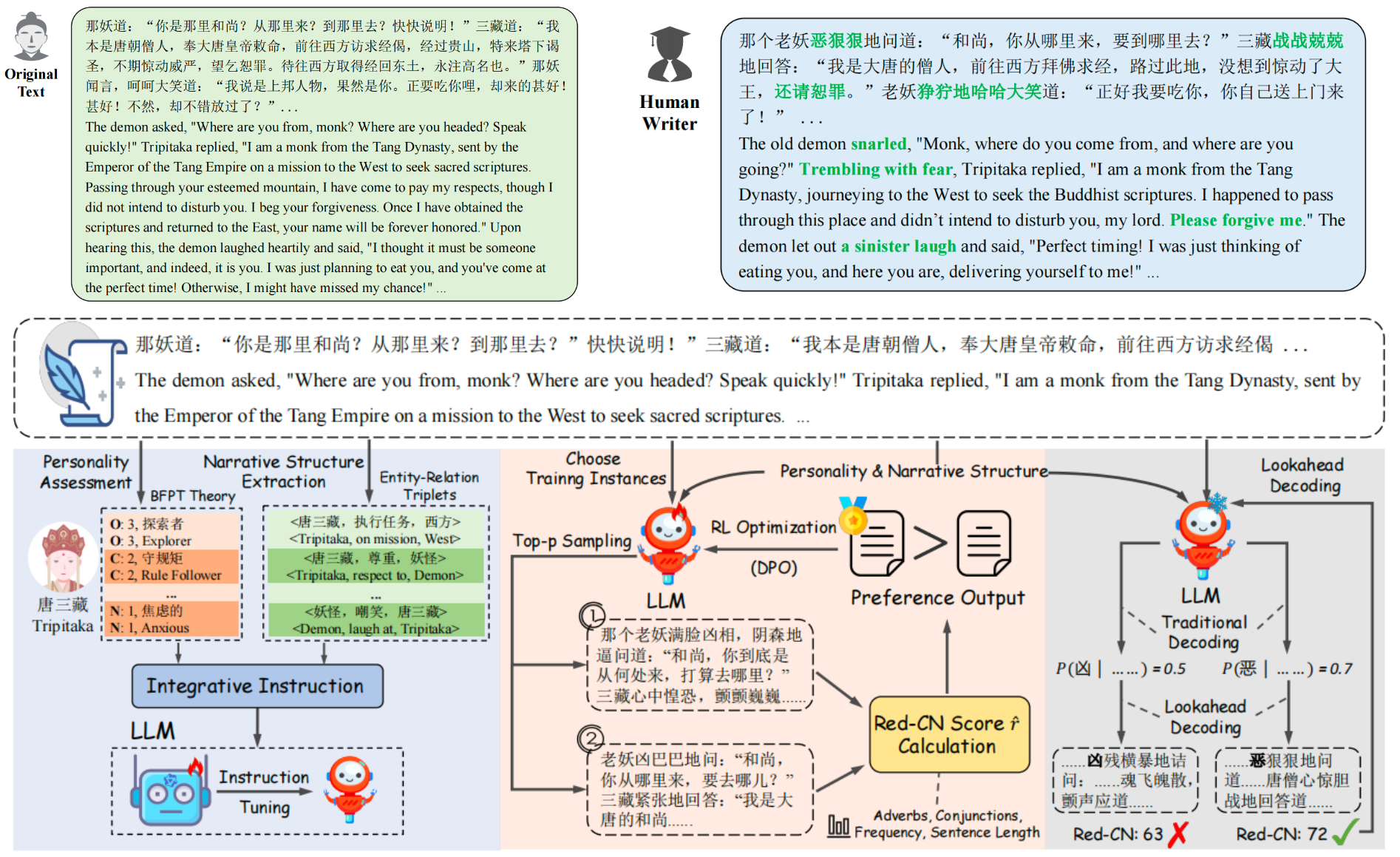

Classic4Children: Adapting Chinese Literary Classics for Children with Large Language Model

Jiali Chen, Xusen Hei, Yuqi Xue, Zihan Wu, Jiayuan Xie, Yi Cai

Findings of the Nations of the Americas Chapter of the ACL, NAACL 2025

[Paperlink], [Code]

Area: Large Language Model, Text Style

Our proposed InstructChild explicitly leverages children’s reading preferences to guide the LLM in generating child-friendly text. We also construct the Classic4Children dataset for a comprehensive evaluation. |

|

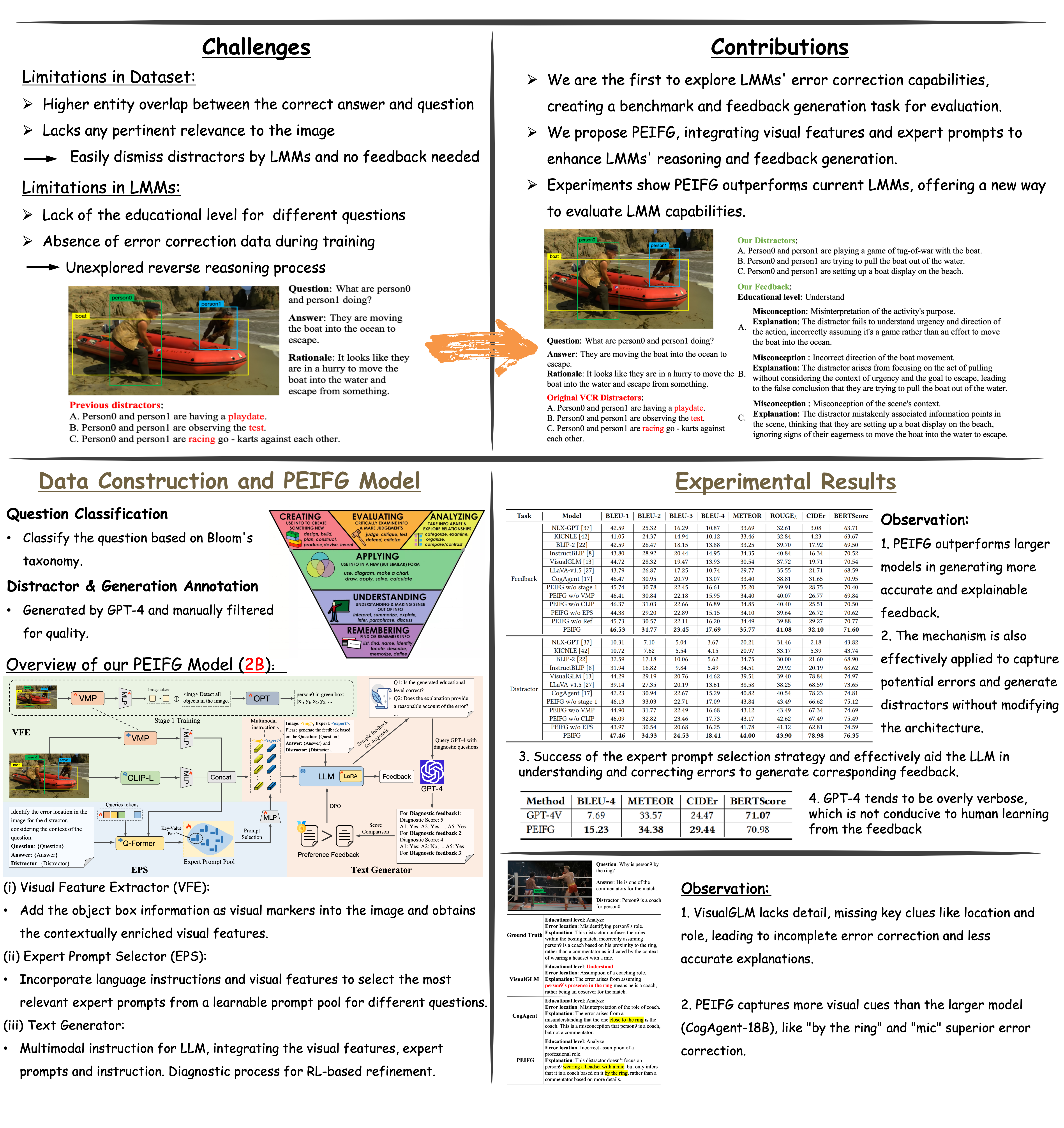

Learning to Correction: Explainable Feedback Generation for Visual Commonsense Reasoning Distractor

Jiali Chen, Xusen Hei, Yuqi Xue, Yuancheng Wei, Jiayuan Xie, Yi Cai, Qing Li

ACM Multimedia, ACM MM 2024

[Paperlink], [Code]

Area: Large Multimodal Model, New Benchmark

We investigate the error correction capabilities of large multimodal models (LMMs), construct a new benchmark, and introduce the feedback generation task for evaluation. I would like to extend my heartfelt gratitude to my girlfriend, Ms. Wen, for inspiring the idea behind this paper. |

|

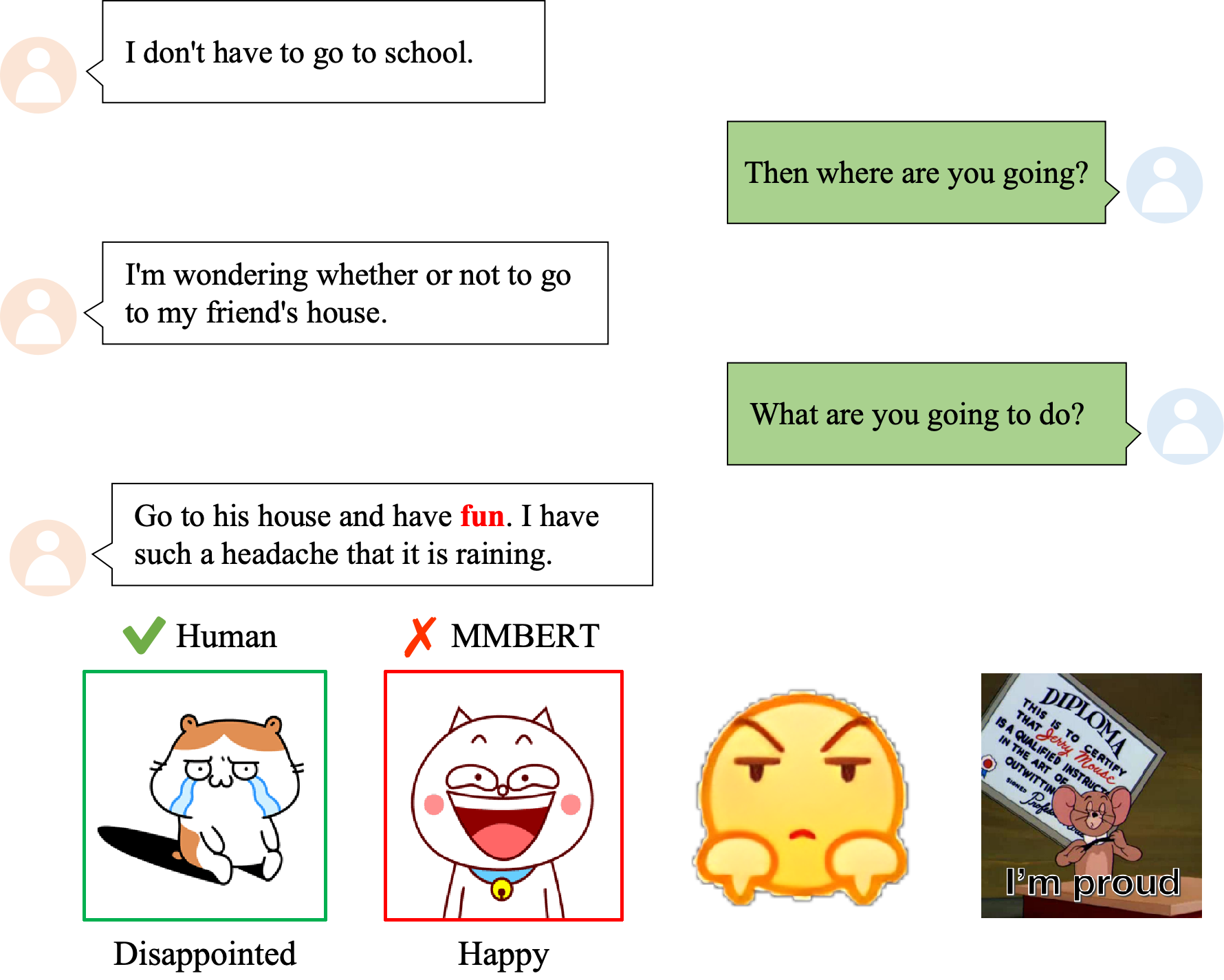

Deconfounded Emotion Guidance Sticker Selection with Causal Inference

Jiali Chen, Yi Cai, Ruohang Xu, Jiexin Wang, Jiayuan Xie, Qing Li

ACM Multimedia, ACM MM 2024

[Paperlink]

Area: Bias, Causal Inference, Sticker Selection

This paper presents a Causal Knowledge-Enhanced Sticker Selection model that addresses spurious correlations in sticker selection by using a causal graph and a knowledge-enhanced approach. |

|

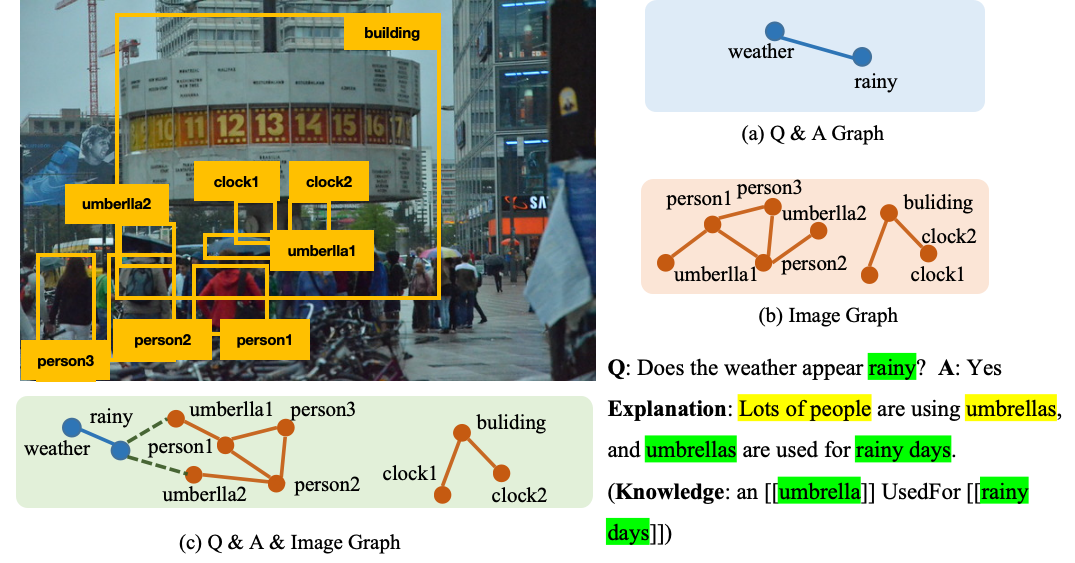

Knowledge-Augmented Visual Question Answering with Natural Language Explanation

IEEE Transactions on Image Processing, TIP 2024

[Paperlink], [Code]

Area: VQA, Multimodal Reasoning

We introduce KICNLE, which uses external knowledge to generate answers and explanations that are consistent with each other. |

|

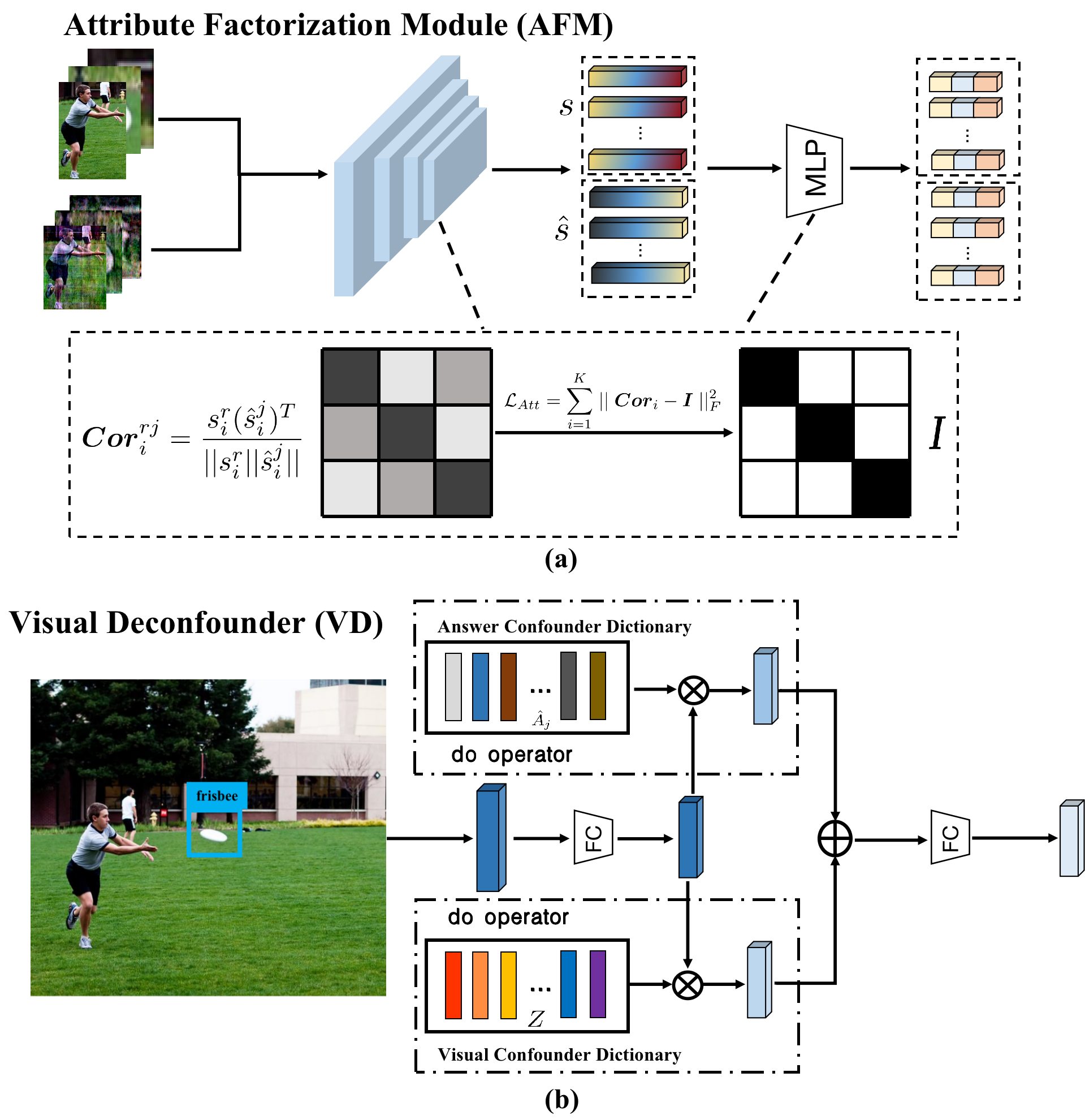

Deconfounded Visual Question Generation with Causal Inference

Jiali Chen, Zhenjun Guo, Jiayuan Xie, Yi Cai, Qing Li

ACM Multimedia, ACM MM 2023

[Paperlink], [Code]

Area: Bias, Causal Inference, Visual Question Generation

We find that previous models frequently learn highly co-occurring object relationships and attributes, which is an inherent bias in question generation. This study first introduces a causal perspective on VQG and adopts a causal graph to analyze spurious correlations among variables. We then propose KECVQG, which mitigates the impact of spurious correlations on VQG. |

|

Last updated in July 2025

This website template is borrowed from [here].

|